Although virtualization has been around for more than 30 years, its reach has grown substantially over the last decade, with major technology companies such as VMware and Microsoft developing their own hypervisors. With the emergence of multi-core processors and increasingly dense memory, workloads have grown tremendously, paving the way for a number of hypervisors and cloud technologies. One of the biggest benefits of server virtualization is the migration from physical servers to virtual machines. This reduces the number of physical servers in an infrastructure, and with it the hardware resources a business needs in its IT environment. When it comes to backup, a solution must be intelligent and comprehensive enough to handle the workloads of any hypervisor. IT managers appreciate how virtualization backup has evolved, so we asked a few virtualization experts what the future holds for server virtualization backup.

David Marshall (vExpert, 2009 – 2017 & Owner of vmblog.com)

As we enter 2018, we’ve witnessed skyrocketing valuations on the stock and cryptocurrency markets. But it’s data that’s going to be the key currency to watch this year. Amid a growing flood of data, ransomware and evolving threats, organizations will continue to contend with data challenges and unplanned downtime. And because of that, 2018 will be the year of zero tolerance for data loss.

If I’ve learned anything about server virtualization over the last 18 years, it’s that the technology doesn’t rest on its laurels. Server virtualization evolves, matures, expands and creates, and the same will happen by proxy with server virtualization backup. Virtualization has changed so much in IT, and along the way, it’s increased the number of options made available to the backup industry.

As companies continue to move legacy IT toward a more modern, software-defined datacenter built on server virtualization, cloud computing and containers, they will need to update their data protection strategies with newer backup and recovery approaches.

Watch for technologies like blockchain to gain use in securing data storage as it provides global authenticity and security for data and transactions of any kind, reducing cost and complexity and making data “tamper-proof.” And then sit back and watch as machine learning, artificial intelligence and predictive analytics take over and make backup administrators faster and more productive at their jobs. Systems will become smart enough to know which versions of files and application recovery points to roll back to after an attack. AI will leverage predictive learning algorithms and automatically perform proactive recoveries, eliminating outages even before an end user can detect them.

One thing is for certain, server virtualization backup has plenty of room for growth and expansion. And this is going to be a fun space to watch in 2018 and beyond.

Brandon Lee (IT technologist and Owner at virtualizationhowto.com)

“There is no doubt the landscape of server virtualization backup will continue to morph and evolve. New business-driven initiatives to meet compliance requirements, such as the new GDPR regulations, will shape how businesses look at the data contained in backups. There will be an added need for near-continuous data protection as organizations become more and more data-driven from every perspective. Server virtualization backup solutions, both today and tomorrow, will be looking for more effective and efficient ways to capture data changes. As newer technologies such as containers become more mainstream, businesses will look to their data protection solutions to evolve with these technologies and protect these new workloads. Software-defined architectures will continue to gain momentum, meaning data protection solutions will need tight integration with vendor solutions and technologies. The continued migration to cloud-based architectures will also mean that data and workloads can live anywhere, both on-premises and in the cloud. This will continue to drive the need for data protection solutions to provide the tooling and integrations needed to protect workloads wherever they live in multi-cloud and hybrid environments. It will be exciting to see how these and other, as yet unforeseen, technologies change the landscape of server virtualization backup over the next few years!”

Prigent Nicholas (MVP & Owner of get-cmd.com)

The amount of data at every company is growing at a very high rate every day, which means that backup is essential. IT professionals must be prepared, because lost data can be irreplaceable! One important point to take into consideration is simplifying your backup management. This means you should define a backup strategy for the coming years. If you work with server virtualization, the main goal is to make backing up and restoring individual virtual machines straightforward. In my opinion, a robust virtualization project should include a robust and simple backup solution.

If you are implementing a backup solution in a virtualized environment, you should take a look at hyper-converged infrastructure systems, which can be interesting for IT professionals. Hyper-converged infrastructure integrates compute, networking and storage via virtualization. It also means that a hyper-converged solution integrates backup into its design rather than treating it as an add-on. This is one reason hyper-converged infrastructure has become increasingly popular, and it will likely remain so in the coming years.

If you can’t implement a hyper-converged solution in the coming years, I would advise you to take a look at the following option: Azure.

Businesses do not want to spend time and energy managing backup infrastructure as was done in the past. Companies must conserve resources for the things that add value for the business. Take a look at Azure Backup:

https://www.bdrsuite.com/blog/getting-started-with-azure-backup/

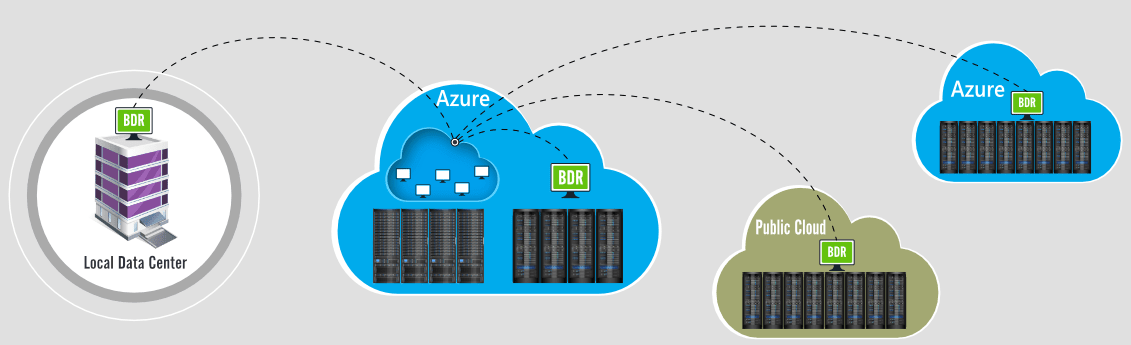

Azure can be very interesting in a fully virtualized environment because you can easily replicate your virtual machines to Azure. You could also consider using Microsoft Azure as an extension (or maybe even a replacement) of your data centers. Azure is growing every day, and Azure Backup is one of the big advantages of moving to the cloud. On top of that, several backup solutions allow you to back up your on-premises virtual machines to the cloud and restore them from it. Another advantage is the ability to use Azure as your IT disaster recovery site, which must be defined in your backup strategy.

Kanishk Sethi (vExpert and owner of Let’s Virtualize)

According to an IDC report, there are more than 20 million IT professionals worldwide, and this number is expected to grow by 26 million by 2020. The question is how much data these IT professionals will bring in by 2020, which is expected to explode to 1200 GB.

For this reason, and because of productivity gains, hyper-converged infrastructure, and more importantly hyper-converged storage and backup solutions, are becoming more popular every day, and the future of backup lies in hyper-convergence as well.

Looking ahead, we need to ensure that the backup server is equipped to take advantage of more processors, memory and I/O, while also providing more capabilities, including high availability.

The core functions of any backup software, such as data protection, deduplication, replication and recovery, need to be expanded in light of the latest trends and the overall increase in demand for backup.

Preetam Zare (18+ years of experience in ICT, owner of vzare.com)

Data Growth: A Unique Challenge

Going by current trends, two things will remain constant for the foreseeable future:

- Data growth will continue to rise exponentially.

- Vulnerabilities will continue to be exploited.

Some of the biggest questions any organization faces are:

- Where does the data reside?

- Does the data need to be backed up?

- How frequently does the data change?

- How long does the data need to be retained?

There is no denying that every backup product is designed around these principles. The difference lies in how data is ingested and at what rate. The dynamics have changed as we have found more stable solutions for managing big data.

All too often, we face the following challenges when it comes to restores:

- The restored data is of little value to the business.

- Restored data does not bring the service back online immediately; numerous configuration changes are still needed.

These challenges arise because data is growing 10 times faster than it was a couple of years ago. The primary reason is that data is now being ingested from:

- Apps (Mobile)

- Internet of Things (smart homes, smartwatches, etc.)

- Machine learning

- Artificial intelligence

As data grows, it must be classified to separate mission-critical data, critical data and less critical data. Data classification will be driven by organizational policies and regulations, the most relevant example being GDPR. GDPR requirements focus especially on where data resides, whether it is classified or tagged, and how its growth is tracked within the enterprise. Other GDPR requirements include the ability to extract data wherever it resides and to check its validity.

With so many significant requirements, backing up data all the way from the application stack to the database stack is going to be very difficult. Backup products are designed to back up data, but most of them lack the capability to:

- Tag the data

- Identify the location of the data

- Extract the data on demand and in a controlled fashion

- Provide controlled restores using role-based access control.

Although today we focus on backing up the OS, databases and application configuration using image-based backup, tomorrow (and in the very near future) we will need a solution that can capture data from IoT devices. This data must be backed up securely. That covers only the backup side; restores must also be as quick as possible, irrespective of where the data is stored.

Data Protection and Orchestration

With the rise of cloud repositories spread across geographies, customers need to prepare for nearline recovery, especially in the event of a cyber attack, which, as we all know, now happens every hour. This demands that backup products evolve in a big way from the restore perspective. If data is backed up every second, it should be available for restore the very next second. Integration with the OS, applications and IoT needs to be tighter to allow faster recovery.

Backup Product

Windows 10, as we all know, is updated frequently, and Microsoft recently took the same approach for Windows Server. This complicates service uptime, integrations and dependencies. It is crystal clear that we need an ever-changing, DevOps-style strategy for backup products as well. Just as we invoke a checksum mechanism to detect when data is corrupted, we need a similar checksum mechanism to review data, so that any change in the data is picked up for backup.

Today this is not happening at the level of seconds. Data is growing every second, and we need to back it up at the same rate, which calls for a different approach. I can envision artificial intelligence tracking such data changes and invoking backups in a sophisticated way without impacting VM performance. Existing snapshot technologies are inadequate to achieve this level of data protection. A DevOps approach should build data protection into the design from the start: every time a feature is added to a product, its purpose, its data ingestion rate and the criticality of its data must be reviewed and added to the backup cycle.
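To make the checksum idea concrete, here is a minimal Python sketch, assuming fixed-size 4 KiB blocks and SHA-256 digests (both illustrative choices, not taken from any backup product). It hashes each block, compares the digests against the manifest from the previous run, and returns the indices of blocks that changed or were appended. Those are the only blocks an incremental backup would need to copy. The names `block_checksums`, `changed_blocks` and `BLOCK_SIZE` are hypothetical. In practice, hypervisors expose their own change tracking, such as VMware Changed Block Tracking (CBT) and Hyper-V Resilient Change Tracking (RCT), so backup tools do not have to rehash every block.

```python
import hashlib

# Hypothetical block size for illustration; real products choose their own.
BLOCK_SIZE = 4096


def block_checksums(data: bytes, block_size: int = BLOCK_SIZE) -> list[str]:
    """Return a SHA-256 hex digest for each fixed-size block of data."""
    return [
        hashlib.sha256(data[i:i + block_size]).hexdigest()
        for i in range(0, len(data), block_size)
    ]


def changed_blocks(previous: list[str], current: list[str]) -> list[int]:
    """Return indices of blocks whose digest differs from the previous
    manifest, including blocks appended since the last run.
    Blocks that disappeared because the data shrank are not reported."""
    changed = []
    for index, digest in enumerate(current):
        if index >= len(previous) or previous[index] != digest:
            changed.append(index)
    return changed


if __name__ == "__main__":
    original = b"A" * 4096 + b"B" * 4096
    modified = b"A" * 4096 + b"C" * 4096 + b"D" * 10
    baseline = block_checksums(original)
    latest = block_checksums(modified)
    # Block 0 is unchanged, block 1 was rewritten, block 2 was appended.
    print(changed_blocks(baseline, latest))  # [1, 2]
```

Comparing per-block digests, rather than one digest for the whole disk, lets the backup copy only the changed regions. That is the property a seconds-level backup cycle would depend on.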

CISO and CIO Involvement

As the volume and value of data grow rapidly, addressing data protection will need the blessing of the CISO and CIO. It will require enhanced data protection policies covering security, restoration and the number of copies to be created for the data. In the age of apps, the presentation layer and the database layer are separate and independent. In this scenario, where data persists and how long it remains on the servers need thorough review. These decisions directly impact your backup policies.

Backup products have a long way to go, and that journey is about evolving to address current data growth challenges and protect data dynamically. A backup product designed for today’s problems cannot address tomorrow’s challenges. It needs to look beyond the server, OS and application; it needs orchestration and must become part of the DevOps cycle.

Conclusion:

Based on the inputs received from the experts, the objective of virtualization backup is to ensure business continuity for any organization. An optimal backup solution would handle VMware backup, Microsoft Hyper-V backup and other specific use cases; in other words, it would serve as a comprehensive solution for all the requirements of a data center.

Experience modern data protection with the latest Vembu BDR Suite v.3.9.0 FREE edition. Try the 30-day free trial here: https://www.bdrsuite.com/vembu-bdr-suite-download/

Got questions? Email us at: vembu-support@vembu.com for answers.

Follow our Twitter and Facebook feeds for new releases, updates, insightful posts and more.