CPU Rightsizing for vSphere hosts

Sizing a server can be tricky, especially when it comes to processors. Manufacturers offer such a wide variety of hardware that it can be confusing to know which model to pick and, more importantly, why.

Without going too deep into how to pick a CPU, let’s look at a few technical and financial aspects that can help you make a choice you won’t regret in 2 years.

Number of physical cores

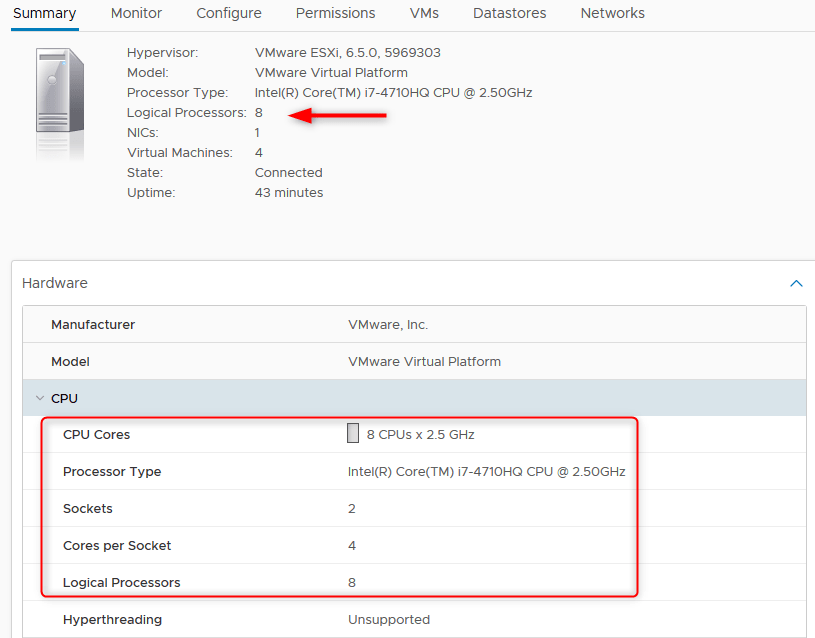

I specified physical cores in the title because the host summary in the vSphere client shows the logical processor count, which reflects hyper-threading. If you expand the Hardware tab, you get the physical core count per CPU socket.

In the screenshot above, you can see that the host has 8 physical cores (4 cores x 2 sockets). Because my ESXi host is nested, hyper-threading is disabled, so there are 8 logical cores as well, but you get the idea.
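To make the distinction concrete, the logical count is the physical count multiplied by the number of hardware threads per core. The helper below is only an illustration: its name is made up, and it assumes 2 threads per core when hyper-threading is enabled, which is the usual case on Intel Xeon.

```python
from typing import Tuple


def core_counts(sockets: int, cores_per_socket: int, hyperthreading: bool) -> Tuple[int, int]:
    """Return (physical_cores, logical_cores) for a host.

    Assumption: 2 hardware threads per core when hyper-threading is on.
    """
    physical = sockets * cores_per_socket
    threads_per_core = 2 if hyperthreading else 1
    return physical, physical * threads_per_core


# The nested host from the screenshot: 2 sockets x 4 cores, HT disabled
print(core_counts(2, 4, hyperthreading=False))  # (8, 8)
# The same host with HT enabled would report 16 logical cores
print(core_counts(2, 4, hyperthreading=True))   # (8, 16)
```

Only the physical figure belongs in the vCPU : pCore ratio discussed below.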

Clock speed (GHz) is not the only thing to look at when choosing a CPU. Core count is a very important metric in your sizing, especially with highly concurrent workloads such as virtualization.

When executing CPU instructions, VMs are scheduled by the ESXi scheduler to get a slice of the hardware. If a VM has 2 virtual cores, ESXi has to find 2 available cores to schedule it. If too many virtual cores ask for a slice of the CPU at the same time, the scheduler has to queue them and delay the operations it can’t satisfy immediately, which results in CPU ready issues.

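You can check the CPU ready of your VMs in esxtop: press c to display the CPU metrics and look at %RDY. As a rule of thumb, the value should never go above 10% per vCPU. The VM-level value is summed across the VM’s vCPUs, so press e to expand a VM and see the per-vCPU figures.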
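A sketch of that check, assuming the VM-level %RDY you read in esxtop is the sum across the VM’s vCPUs, so it is divided by the vCPU count before being compared with the 10% threshold. The function names and the threshold parameter are illustrative, and the result is approximate since a VM group also contains a few non-vCPU worlds.

```python
def ready_per_vcpu(vm_rdy_percent: float, num_vcpus: int) -> float:
    """Approximate per-vCPU CPU ready from a VM-level %RDY value.

    Assumption: the VM-level figure is the sum over the VM's vCPU worlds.
    """
    if num_vcpus < 1:
        raise ValueError("num_vcpus must be at least 1")
    return vm_rdy_percent / num_vcpus


def is_contended(vm_rdy_percent: float, num_vcpus: int, threshold: float = 10.0) -> bool:
    """Flag a VM whose per-vCPU ready time exceeds the threshold (10% by default)."""
    return ready_per_vcpu(vm_rdy_percent, num_vcpus) > threshold


# A 4-vCPU VM showing 24% RDY is about 6% per vCPU: not a problem yet
print(is_contended(24.0, 4))  # False
# A 2-vCPU VM showing 24% RDY is about 12% per vCPU: worth investigating
print(is_contended(24.0, 2))  # True
```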

To prevent this, try to keep the vCPU : pCore ratio at a sensible value. This ratio is the sum of the vCPUs provisioned on your running VMs relative to the physical core count of your host.

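You can use this PowerCLI snippet to display this information for every host in your environment (it assumes you are already connected with Connect-VIServer):

```powershell
foreach ($vHost in Get-VMHost) {
    # Sum of the vCPUs provisioned on the powered-on VMs of this host ([int] turns "no VMs" into 0)
    $VMcore = [int](($vHost | Get-VM | Where-Object PowerState -eq 'PoweredOn' |
        Measure-Object -Property NumCpu -Sum).Sum)

    # Physical cores, not hyper-threaded logical processors
    $pCores = $vHost.ExtensionData.Summary.Hardware.NumCpuCores

    [pscustomobject]@{
        Host       = $vHost.Name
        pCores     = $pCores
        vCPU       = $VMcore
        vCPUtoCore = "$VMcore : $pCores"
    }
}
```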

As a rule of thumb, a 4:1 ratio is a safe way to ensure that your VMs are unlikely to encounter CPU contention. However, it depends on the workloads the host runs. A highly transactional database, for example, may become a “noisy neighbor” if you place it on a densely populated host, hurting the performance of all the other VMs, and vice versa.

On the other hand, a host running VDI desktops could easily go up to 10:1 without taking a huge risk.

As mentioned before, if you are unsure of your workloads’ concurrency, a 4:1 ratio will be a sensible figure to start with.
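Turning a target ratio into a planning figure is simple arithmetic: the host’s vCPU budget is its physical core count times the ratio, and the headroom is that budget minus the vCPUs already running. A minimal sketch, where the function names, the 24-core host and its VM mix are hypothetical examples rather than real measurements:

```python
from typing import Sequence


def vcpu_ratio(vm_vcpus: Sequence[int], physical_cores: int) -> float:
    """vCPU : pCore ratio for the powered-on VMs of one host."""
    return sum(vm_vcpus) / physical_cores


def vcpu_headroom(vm_vcpus: Sequence[int], physical_cores: int,
                  target_ratio: float = 4.0) -> int:
    """vCPUs that can still be added before the host exceeds target_ratio.

    A negative result means the host is already over the target.
    """
    budget = int(physical_cores * target_ratio)
    return budget - sum(vm_vcpus)


# Hypothetical 24-core host: 30 VMs with 2 vCPUs and 5 VMs with 4 vCPUs
running = [2] * 30 + [4] * 5
print(round(vcpu_ratio(running, 24), 2))            # 3.33
print(vcpu_headroom(running, 24))                   # 16  (96 - 80 at 4:1)
print(vcpu_headroom(running, 24, target_ratio=10))  # 160 (240 - 80 at a VDI-style 10:1)
```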

Clock speed and turbo mode

If you look at the Intel ARK website, you will see that the base clock speed decreases as you add more cores. That doesn’t mean you can’t go over 2GHz in normal mode on 24-core CPUs, but the prices get a little out of hand. Units crossing the $10K line are usually reserved for rather specific cases, not for sizing a regular virtual infrastructure (unless your nickname is “The Scale-up King”).

As mentioned, the number one criterion in most mixed-workload environments is concurrency. 20 cores at 2.1GHz will probably serve you far better than 10 cores at 3.4GHz. Yet that doesn’t mean you will never need more than 2.1GHz.
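A back-of-the-envelope way to see this: cores x base clock gives the aggregate capacity, while the core count alone sets how many vCPUs can run at the same instant. This sketch ignores turbo, IPC differences between CPU generations and hyper-threading, so treat it as a rough comparison only.

```python
def aggregate_ghz(cores: int, base_ghz: float) -> float:
    """Total base-clock capacity of a CPU (ignores turbo and IPC differences)."""
    return cores * base_ghz


many_cores = aggregate_ghz(20, 2.1)  # spread over 20 scheduling slots
fast_cores = aggregate_ghz(10, 3.4)  # spread over 10 scheduling slots
print(f"20 x 2.1GHz = {many_cores:.1f}GHz, 10 x 3.4GHz = {fast_cores:.1f}GHz")
```

The 20-core part offers both more aggregate GHz (42 vs 34) and twice as many cores for the scheduler to place vCPUs on.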

Many workloads are spiky and can go crazy on CPU usage during an export or an indexing operation. In that case, 2.1GHz may throttle the VM and lengthen its execution time.

This is where Turbo mode comes into play. Intel and AMD each have their own approach to the feature, and their own dramatic name for it. In effect, it allows the CPU to overclock itself to satisfy increased demand, depending on factors such as:

- Type of workload

- Number of active cores

- Estimated current consumption

- Estimated power consumption

- Processor temperature

Here is what VMware says about Intel’s turbo mode:

“Intel Turbo Boost, on the other hand, will step up the internal frequency of the processor should the workload demand more power and should be left enabled for low-latency, high-performance workloads. However, since Turbo Boost can over-clock portions of the CPU, it should be left disabled if the applications require stable, predictable performance and low latency with minimal jitter.”

I personally think that you will benefit from leaving Turbo Mode enabled as it will allow your CPU spikes to be satisfied if the other cores are not too busy with other VMs.

Number of CPU sockets

If you look at preconfigured server builds, you will very often see that they come with 2 CPU sockets. Yet that may not offer the best value for you, especially if you don’t have huge memory needs. It will very much depend on what you think of the next few sections and where you stand in the age-old “Scale-up vs Scale-out” debate.

Memory and PCI lanes requirements

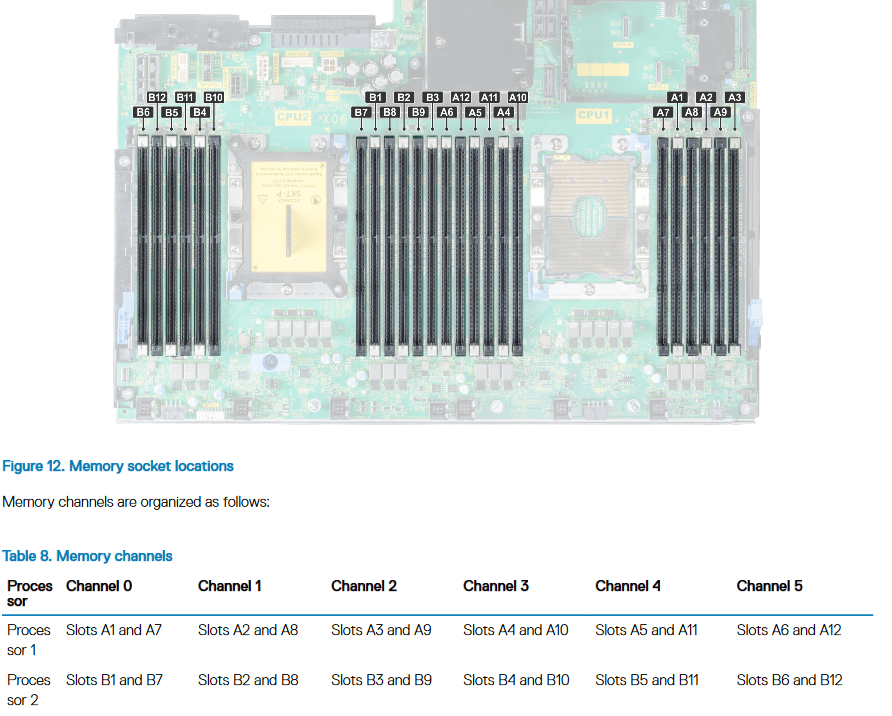

The memory slots on modern servers are split between processors: half of them are accessible by one CPU, the other half by the second one. The same goes for 4-socket servers, where each CPU gets a quarter of the slots. PCI lanes are usually split the same way, so if only one CPU socket is populated, some PCI slots may be unavailable, just like the memory slots.

This means that your memory requirements may force you to go for a dual CPU configuration. If you don’t need more than 384GB per server you will almost always be able to go single CPU.
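The single-socket ceiling is roughly the number of DIMM slots wired to that CPU times the DIMM size you are willing to pay for; the CPU model can also impose its own memory limit. In this sketch, 12 slots per socket and 32GB DIMMs are assumptions chosen for illustration (they happen to give 384GB), and the function names are hypothetical, so check your server’s technical guide for the real figures.

```python
def max_memory_gb(sockets: int, slots_per_socket: int, dimm_gb: int) -> int:
    """Memory reachable with every slot of the populated sockets filled."""
    return sockets * slots_per_socket * dimm_gb


def sockets_needed(required_gb: int, slots_per_socket: int, dimm_gb: int) -> int:
    """Smallest number of populated sockets that can hold required_gb."""
    per_socket = slots_per_socket * dimm_gb
    return -(-required_gb // per_socket)  # ceiling division on integers


# Assumed layout: 12 DIMM slots per socket, 32GB DIMMs
print(max_memory_gb(1, 12, 32))     # 384
print(sockets_needed(320, 12, 32))  # 1
print(sockets_needed(413, 12, 32))  # 2
```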

Take the Dell R740 as an example. You can see in the excerpt above that the A memory slots on the right-hand side are accessed by Processor 1 and the B slots on the left by Processor 2. The technical guide also mentions what will be unavailable in terms of PCI IO in a single socket configuration.

With Single CPU configuration, any Riser1 (1A/1B/1C/1D) card and only Riser 2B will be functional.

Licensing costs

As you probably know, vSphere products are licensed per CPU socket and are usually not cheap. If you don’t need huge amounts of memory per server, there are great savings to be made by going for a single-socket server.

Let’s translate the cost of CPUs and licenses into $/core to get an idea of the difference between single and dual socket. The comparison below is based on 24 cores per server.

Single Socket : 1 x Intel® Xeon® Platinum 8168

- 24 cores @ 2.70GHz

- 1 x $5890.00

Dual Socket : 2 x Intel® Xeon® Gold 6136

- 2 x 12 cores @ 3.00GHz

- 2 x $2460.00

vSphere Enterprise Plus license with 3 years support : $7818.29

Note that these are the public prices so the hit may be softer if you get a discount via your retailer.

Single socket version: $5890.00 + $7818.29 = $13708.29 => $571 / core

Dual socket version: 2 x ($2460.00 + $7818.29) = $20556.58 => $856 / core

You do get 7.2GHz more per server with the dual socket version (72GHz vs 64.8GHz), but is it worth the $6850 extra?
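The same arithmetic as a short script, using the list prices quoted above and one vSphere Enterprise Plus license per populated socket. Like the comparison itself, it leaves out the chassis and memory; the function name is illustrative, and you can swap in your own quotes to redo it.

```python
# vSphere Enterprise Plus with 3 years support, list price quoted above
LICENSE_PER_SOCKET = 7818.29


def server_cost(cpu_price: float, sockets: int, cores_per_socket: int):
    """Return (CPU + license cost, cost per core) for one server.

    One vSphere license per populated socket; chassis and memory not included.
    """
    total = sockets * (cpu_price + LICENSE_PER_SOCKET)
    return total, total / (sockets * cores_per_socket)


single_total, single_per_core = server_cost(5890.00, sockets=1, cores_per_socket=24)  # Platinum 8168
dual_total, dual_per_core = server_cost(2460.00, sockets=2, cores_per_socket=12)      # 2 x Gold 6136

single_ghz = 1 * 24 * 2.7   # 24 cores @ 2.70GHz
dual_ghz = 2 * 12 * 3.0     # 2 x 12 cores @ 3.00GHz

print(f"Single: ${single_total:,.2f} => ${single_per_core:.2f}/core, {single_ghz:.1f}GHz")
print(f"Dual:   ${dual_total:,.2f} => ${dual_per_core:.2f}/core, {dual_ghz:.1f}GHz")
print(f"Dual costs ${dual_total - single_total:,.2f} more for {dual_ghz - single_ghz:.1f}GHz extra")
```

With these prices it reports about $571.18 and $856.52 per core, and a $6,848.29 premium for the dual socket build’s extra 7.2GHz.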

If you don’t have specific needs for all PCI or memory lanes, you should definitely consider going single socket.

Keep in mind that most VMware products are licensed per CPU socket, meaning that if you go for dual CPU servers you will have to pay double for each product like vRops, VSAN, NSX…

Failure domain

A failure domain is a section of the environment that can be taken down by a single hardware issue. In this particular use case, the failure domains are the physical servers running our VMs.

The bigger the server, the more VMs can run on it, the bigger the failure domain.

After reading the section about physical cores, it would be natural to think “Hell, I’ma throw 50 cores per server so they run 200 VMs each”.

Well, you sure will optimize the cost of your servers but the day you lose one for some reason, you lose 200 VMs at once. 200 VMs to be restarted on the surviving hosts in the cluster. Not ideal is it?

Now there is no right or wrong answer to how you should size your failure domains, this is the famous “scale-up vs scale-out” debate I was referring to. At the end of the day, it will depend on your needs and what you feel comfortable with.

What risk am I willing to take?

As mentioned above, the bigger the failure domain, the more VMs go down with the host the day it goes AWOL. If you are a DRS freak who never misses a single anti-affinity rule, you could get away with big failure domains, as you will have redundant VMs running on other hosts. If you have a lot of critical VMs that are not clustered, like legacy software, chances are you will want to be more careful and not go crazy on the VM density of your hosts.

How critical are my workloads?

There are a lot of workloads out there that would not mind much if the server went down. Examples of this might be VDI desktops, containers or other stateless services that can be safely killed and quickly restarted or recreated somewhere else. If your environment is big on this type of workload you can start looking at increasing your VM density.

What is my budget?

CPU and memory make up most of the price, but smaller failure domains mean more servers, so more hardware to pay for (chassis, motherboards, NICs…) and potentially more licenses. Depending on the CPU models (core count) you choose, that can also mean a higher $/core.

The balance between CPU and memory

Another tricky part of sizing a host is to make sure that you will make the most of its resources, otherwise, you are just burning the budget.

You can quickly get carried away in Dell’s configurator and throw 654GB of RAM at your servers with only 12 cores of CPU. Sure, if you have monster VMs that require 256GB of RAM with only 4 vCPUs, that’ll do just fine, but you don’t see those very often.

The issue here is that you will quickly run out of available CPU while barely using 25% of your memory, and the resource usage view in the vSphere web client will be a shameful sight. Of course, the opposite is true if you pick a monster 56-core CPU combo with 128GB of RAM, although that one is easier to fix, as it’s easier to add memory to a server than CPU.

It may sound obvious, but it is easier said than done: finding the right balance can be very tricky, and what suits one environment may not suit the next one.

Again, there is no universal answer here, sorry. Still, I found that for regular mixed workloads, a single 20-core CPU with 320GB of RAM was a pretty good balance between CPU, RAM, and cost.
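One way to sanity-check that balance is to work out how much memory the cores can actually keep busy at your target ratio. The sketch below borrows the averages used in the case study further down (2 vCPUs and 6GB per VM, 5:1) as assumptions, and its function name is hypothetical; with those numbers a 20-core socket hosts about 50 VMs, or roughly 300GB, which is in the same ballpark as 320GB.

```python
def balanced_memory_gb(physical_cores: int, vcpu_to_pcore: float,
                       vcpu_per_vm: float, gb_per_vm: float) -> float:
    """Memory that matches a host's CPU capacity for a given average VM profile."""
    vm_count = physical_cores * vcpu_to_pcore / vcpu_per_vm
    return vm_count * gb_per_vm


print(balanced_memory_gb(20, 5, 2, 6))  # 300.0 (50 VMs x 6GB)
print(balanced_memory_gb(12, 5, 2, 6))  # 180.0 (30 VMs x 6GB)
```

With the same profile, a 12-core host would only keep about 180GB busy, which is why loading it with several hundred GB leaves most of the memory idle.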

Case study

Let’s take an example to put all this into practice. We are going to size servers for the following environment:

- 250 VMs

- 2 vCPUs per VM on average

- Peak CPU usage: 300GHz (pretty unlikely)

- 6GB of RAM per VM on average

- vCPU:pCore ratio of 5:1 on average

- 1 host failure to tolerate

Required physical cores: 250 VM x 2 vCPU / 5 = 100 cores

Required memory: 250 x 6 + 10% = 1650GB
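The two requirements written out so you can plug in your own averages. The constants are the case study’s figures; rounding up is an added assumption so that a fractional result never under-sizes the cluster, and the 10% memory headroom is kept as an integer percentage to avoid floating-point surprises.

```python
import math

VMS = 250
VCPU_PER_VM = 2
GB_PER_VM = 6
VCPU_TO_PCORE = 5           # 5:1 target ratio
MEMORY_HEADROOM_PCT = 10    # +10% on top of the VM memory

required_cores = math.ceil(VMS * VCPU_PER_VM / VCPU_TO_PCORE)
required_memory_gb = math.ceil(VMS * GB_PER_VM * (100 + MEMORY_HEADROOM_PCT) / 100)

print(required_cores)      # 100
print(required_memory_gb)  # 1650
```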

Given the cost of the vSphere licenses, we are going for single-socket servers here as we don’t have explicit needs to do otherwise and it would bump the price considerably.

Let’s simulate what it would look like with a different number of hosts in the cluster. The prices shown for each configuration only reflect the cost of the CPUs and vSphere Ent Plus licenses with 3 years of support; they don’t include the price of the servers themselves. Note that the CPU calculations exclude the failover host.

| #Hosts | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|
| License cost | $31 200 | $39 000 | $46 800 | $54 600 | $62 400 |
| Memory per host (GB) | 413 | 330 | 275 | 236 | 206 |
| #Cores per host | 25 | 20 | 17 | 14 | 13 |
| #VMs per host | 63 | 50 | 42 | 36 | 31 |
| #VMs per host + failover host | 50 | 42 | 36 | 31 | 28 |
| License + CPU cost | n/a | $52 600 | $63 270 | $65 485 | $74 840 |
| $ / core | n/a | $526 | $620 | $668 | $720 |
| $ / VM | n/a | $210 | $253 | $262 | $299 |
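The rows of the table can be reproduced with a few lines, which makes it easy to rerun with your own figures. This is a sketch under assumptions read back from the numbers themselves: the license is rounded to $7,800 per host, values are rounded half up, and $/core divides the total by hosts x the rounded cores-per-host row (that is what makes $63,270 come out at $620). The helper names are hypothetical.

```python
import math

VMS = 250
REQUIRED_CORES = 100
REQUIRED_MEMORY_GB = 1650
LICENSE_PER_HOST = 7800  # assumed: $7,818.29 rounded, as the table appears to do


def round_half_up(x: float) -> int:
    return math.floor(x + 0.5)


def host_row(hosts: int) -> dict:
    """Per-host figures for a cluster of `hosts` (failover host excluded)."""
    return {
        "license_cost": hosts * LICENSE_PER_HOST,
        "memory_per_host": round_half_up(REQUIRED_MEMORY_GB / hosts),
        "cores_per_host": round_half_up(REQUIRED_CORES / hosts),
        "vms_per_host": round_half_up(VMS / hosts),
        "vms_per_host_plus_failover": round_half_up(VMS / (hosts + 1)),
    }


def unit_costs(hosts: int, total_cost: float) -> tuple:
    """($/core, $/VM) computed the way the table's figures suggest."""
    cores = hosts * host_row(hosts)["cores_per_host"]
    return round_half_up(total_cost / cores), round_half_up(total_cost / VMS)


for n in range(4, 9):
    print(n, host_row(n))

# License + CPU totals from the table (5 to 8 hosts)
for n, total in [(5, 52600), (6, 63270), (7, 65485), (8, 74840)]:
    print(n, unit_costs(n, total))
```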

4 Hosts => No

413GB won’t fit in a single CPU configuration, and 50 VMs per server with the failover host included is way too big a failure domain in most cases; you won’t sleep well at night…

5 Hosts => $52600

That one is more realistic, as you’ll be able to get 330GB out of one processor and 20-core CPUs are rather affordable. Running 42 VMs per host is quite dense, but if you are comfortable with it, it could be good value for you. It is the cheapest solution.

The Intel Xeon Gold 6138 would be a good fit, with 100 cores and 200GHz in total, provided you are OK with cores running at 2.00GHz in normal mode.

6 Hosts => $63270

Here is a cluster that doesn’t look bad at all. The failure domains are sensible and the costs shouldn’t be through the roof.

I would probably go for the Intel Xeon Gold 6140 in this case. You get 108 cores and 248.4GHz.

It is indeed pricier than the previous one but you get smaller failure domains for the price.

7 Hosts => $65485

In this scenario, the hosts are smaller, hence the failure domains are even smaller. The peace of mind you get here may come at a reasonable price, at least until you start adding other VMware products and scaling out the cluster.

We can pick the Intel Xeon Gold 5120 for this one, giving us 98 cores (just under the 100 required) and about 215GHz.

8 Hosts => $74840

The mother of safety, with failure domains reduced to 31 VMs per host (excluding the failover host). You do get extra cores and GHz, with 112 cores and 246.4GHz respectively, but at a hefty price compared to the other designs.
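The totals quoted for each option are just hosts x cores x base clock. The sketch below recomputes them from Intel’s published base specs for the three models mentioned (20 cores at 2.0GHz for the Gold 6138, 18 at 2.3GHz for the 6140, 14 at 2.2GHz for the 5120), counting only the hosts in each design and not a spare; the function name is illustrative.

```python
# Intel base specs: (cores, base clock in GHz)
CPUS = {
    "Gold 6138": (20, 2.0),
    "Gold 6140": (18, 2.3),
    "Gold 5120": (14, 2.2),
}
REQUIRED_CORES = 100  # from the case study requirements


def design_totals(hosts: int, model: str):
    """Total cores and base GHz for a single-socket cluster (spare host not counted)."""
    cores_per_cpu, base_ghz = CPUS[model]
    total_cores = hosts * cores_per_cpu
    return total_cores, total_cores * base_ghz


for hosts, model in [(5, "Gold 6138"), (6, "Gold 6140"), (7, "Gold 5120"), (8, "Gold 5120")]:
    cores, ghz = design_totals(hosts, model)
    gap = REQUIRED_CORES - cores
    status = f"{gap} cores short" if gap > 0 else "meets the core target"
    print(f"{hosts} x {model}: {cores} cores, {ghz:.1f}GHz, {status}")
```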

This short case study shows that $/core and $/VM can be useful metrics that give us a little more information about how to choose a configuration for the virtual infrastructure. My preference goes to the 6 server configuration.

Even though it is almost the same price as 7 servers, which is not a bad deal either, you will save money as you scale out your environment with regard to $/core, and even more when you add per-socket VMware products like vRops. The few extra cores and GHz you get are also a nice bonus.

I personally wouldn’t go for the 5 server configuration, as the failure domains are just too big for me. But again, that’s just my opinion, and you might very well think differently. The 8 server configuration is a bit of a waste of money in my opinion, given the cost of the vSphere licenses.

Conclusion

Choosing a CPU configuration for virtualization hosts is no easy task. Lots of variables come into play, and you quickly realize that it is not just about the number of GHz or the processor generation. Sure, you can pick any of them and buy more hardware as your needs evolve, but chances are you’ll be throwing money out the window doing it this way.
