Storage costs far less per gigabyte than it used to, yet SANs and other enterprise storage systems still represent a significant investment for organizations. Making efficient use of provisioned capacity, and reclaiming space that is no longer in use, is critical.

With the virtualized file systems that hypervisors use today, such as VMware’s VMFS, a layer of abstraction sits between the guest data and the physical hardware, so the hardware cannot tell on its own how much storage is really in use and how much is available. VMware environments can use a mechanism called UNMAP to reclaim blocks that are no longer used, which matters most when thin provisioning is in play.

This post looks at two questions:

- What is VMware UNMAP?
- Why is “unmapping” blocks needed, especially when thin provisioning is used?

What is VMware UNMAP and Why Is It Needed?

VMware UNMAP is a command that reclaims space from blocks that were previously written to and whose data has since been deleted. The deletion can come from an application or from the guest operating system.

When thin provisioned disks on VMware VMFS are backed by a storage array, the array is unaware when data is deleted. It still “sees” those blocks as allocated. The layer of abstraction that VMFS provides introduces this “problem”: it keeps the storage array from seeing how much capacity is actually free after file deletions take place.

So what is thin provisioning, and how does it lead to the need for the UNMAP command?

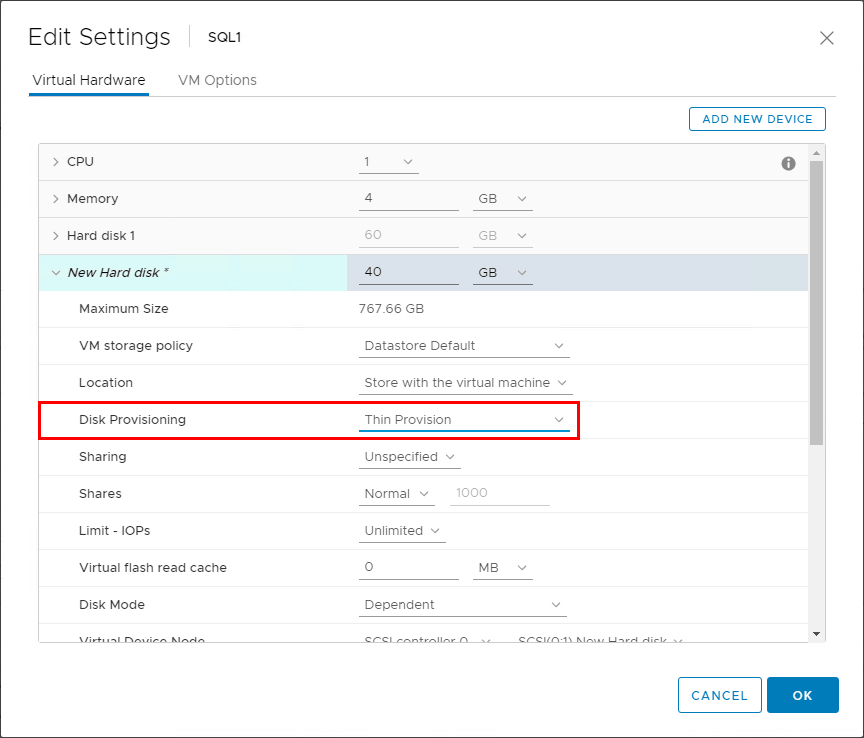

When you thin provision a virtual disk on VMware VMFS, only the space that is actually written is allocated. So even if you provision a VM with a 40 GB hard disk, if only 5 GB has been written to it, only those 5 GB count as used space. This is an important concept to understand as it relates to the UNMAP command. Once blocks have been written by VMFS with thin provisioning, they are not automatically reclaimed when files are deleted in the guest operating system; that requires the VMware UNMAP process.

Adding a thin provisioned disk to a VMware virtual machine

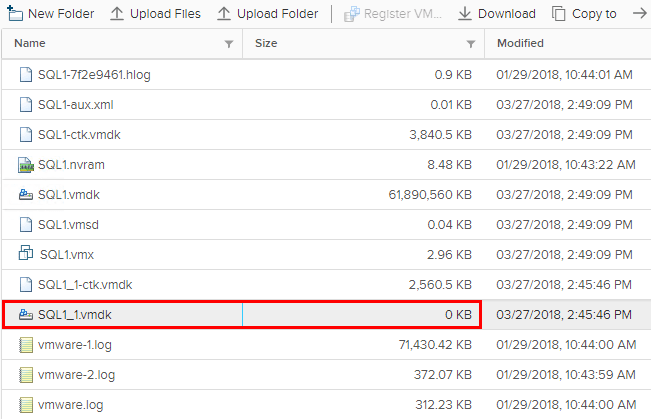

After adding the 40 GB hard disk shown in the previous screenshot, browsing the datastore shows the disk at 0 bytes, because no data has been written to the virtual disk yet. This is the classic characteristic of a thin provisioned virtual disk.

Thin provisioned disk shows 0 KB on the datastore even though it is a 40 GB disk
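The accounting behind this behaviour can be sketched with a toy model. The script below is only an illustration: the variable and function names are made up, sizes are whole gigabytes, and it simplifies by treating array allocation as a single high-water mark. It shows the gap that opens between what the guest uses and what the array believes is allocated, and how an unmap closes it.

```shell
#!/bin/sh
# Toy accounting model of a thin provisioned disk (sizes in GB).
# All names here are illustrative; nothing in this script talks to ESXi.
provisioned=40      # size the guest sees
guest_used=0        # data the guest currently holds
array_allocated=0   # blocks the array believes are in use

guest_write() {     # new data allocates blocks on the array
    guest_used=$((guest_used + $1))
    if [ "$guest_used" -gt "$array_allocated" ]; then
        array_allocated=$guest_used
    fi
}

guest_delete() {    # deletion frees space in the guest only
    guest_used=$((guest_used - $1))
}

unmap() {           # reclamation brings the array back in line with the guest
    array_allocated=$guest_used
}

report() {
    echo "guest=${guest_used}GB array=${array_allocated}GB of ${provisioned}GB"
}

guest_write 5;  report   # guest=5GB array=5GB of 40GB
guest_delete 3; report   # guest=2GB array=5GB of 40GB
unmap;          report   # guest=2GB array=2GB of 40GB
```

The middle line is the situation the article describes: the guest has freed 3 GB, but the array still counts it as allocated until an unmap runs.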

VMFS UNMAP operations tell the storage array that blocks it still has marked as in use can be unmapped and their space reclaimed. In VMware vSphere 6.0, the unmap command is either run manually or scheduled through a cron job on the ESXi host, so that it runs at the desired time.

To run the command manually, pass it the identifier of the datastore in question:

- esxcli storage vmfs unmap -u 4532d832-4ff42822-50eg-d92g77fd8d49

If you run the command against a datastore that does not support the unmap functionality, such as DAS volumes, you will see the following error:

- Devices backing volume <volume-uuid> do not support UNMAP
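If you do not know the datastore's UUID, the sketch below shows one way to look it up and to assemble the unmap command by label instead. `esxcli storage filesystem list` prints the mounted volumes with their names and UUIDs, and `--volume-label` and `--reclaim-unit` are options of `esxcli storage vmfs unmap`. The helper name `build_unmap_cmd` and the label `datastore1` are assumptions made for illustration. The helper only prints the command, so you can review it before running it on a host.

```shell
#!/bin/sh
# build_unmap_cmd LABEL [BLOCKS]
# Prints (does not run) an unmap command for the datastore with this label.
# BLOCKS is the number of VMFS blocks reclaimed per iteration; esxcli
# uses 200 when --reclaim-unit is not given, so that is the default here.
build_unmap_cmd() {
    label="$1"
    blocks="${2:-200}"
    echo "esxcli storage vmfs unmap --volume-label=$label --reclaim-unit=$blocks"
}

# On an ESXi host, list mounted volumes to look up the label and UUID.
# The guard lets this script run harmlessly on a machine without esxcli.
if command -v esxcli >/dev/null 2>&1; then
    esxcli storage filesystem list
fi

# "datastore1" is a hypothetical label; substitute your own.
build_unmap_cmd datastore1
```

A smaller reclaim unit spreads the work over more iterations; what value suits a given array is something to check with the array vendor.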

CRON job

To schedule the command in vSphere 6.0, edit the root crontab, found at:

- /var/spool/cron/crontabs/root

#min hour day mon dow command

1 1 * * * /sbin/tmpwatch.py

1 * * * * /sbin/auto-backup.sh

0 * * * * /usr/lib/vmware/vmksummary/log-heartbeat.py

*/5 * * * * /sbin/hostd-probe ++group=host/vim/vmvisor/hostd-probe

00 1 * * * localcli storage core device purge

30 12 * * * esxcli storage vmfs unmap -u 4532d832-4ff42822-50eg-d92g77fd8d49

After modifying the crontab, restart the crond process like so:

kill -HUP $(cat /var/run/crond.pid)

/usr/lib/vmware/busybox/bin/busybox crond
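Editing the crontab by hand makes it easy to add the same entry twice, so here is a minimal sketch of a helper that appends a line only if it is not already present. The function name `add_cron_entry` is hypothetical, and the demonstration writes to a temporary file rather than the real root crontab. The schedule `30 12 * * *` from the listing above runs the command at 12:30 every day, by the host's clock.

```shell
#!/bin/sh
# add_cron_entry FILE LINE
# Appends LINE to FILE unless an identical line is already present.
add_cron_entry() {
    file="$1"
    line="$2"
    # -x: match the whole line, -F: treat LINE as a fixed string, -q: quiet
    if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
        printf '%s\n' "$line" >> "$file"
    fi
}

# Demonstration against a scratch file (not the real root crontab).
demo=$(mktemp)
entry='30 12 * * * esxcli storage vmfs unmap -u 4532d832-4ff42822-50eg-d92g77fd8d49'
add_cron_entry "$demo" "$entry"
add_cron_entry "$demo" "$entry"   # second call finds the line and does nothing
wc -l < "$demo"                   # counts one line, not two
rm -f "$demo"
```

On the host, FILE would be the crontab path given above, followed by the crond restart.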

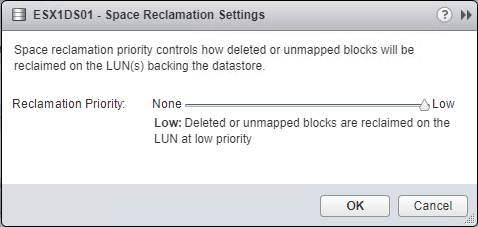

Automatic unmap first appeared in vSphere 5.0, was then withdrawn, and was brought back with vSphere 6.5. If you look at the properties of a VMFS 6 formatted datastore in vSphere 6.5, you will notice a Space Reclamation setting that can be edited.

Space Reclamation priority can be set to the desired configuration
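The same setting can also be inspected and changed from the command line with `esxcli storage vmfs reclaim config`. The sketch below only assembles those commands. The helper name `reclaim_cmd` and the label `datastore1` are assumptions for illustration, and the helper deliberately accepts only `none` and `low`, the two priorities this sketch assumes are available on a vSphere 6.5 VMFS 6 datastore.

```shell
#!/bin/sh
# reclaim_cmd LABEL [PRIORITY]
# With only LABEL, prints the command that shows the current setting.
# With PRIORITY (none or low), prints the command that changes it.
reclaim_cmd() {
    label="$1"
    priority="${2:-}"
    if [ -z "$priority" ]; then
        echo "esxcli storage vmfs reclaim config get --volume-label=$label"
        return 0
    fi
    case "$priority" in
        none|low)
            echo "esxcli storage vmfs reclaim config set --volume-label=$label --reclaim-priority=$priority"
            ;;
        *)
            # Anything else is rejected by this sketch rather than guessed at.
            echo "unsupported priority: $priority" >&2
            return 1
            ;;
    esac
}

# "datastore1" is a hypothetical label; substitute your own.
reclaim_cmd datastore1        # command to show the current setting
reclaim_cmd datastore1 none   # command to turn automatic reclamation off
reclaim_cmd datastore1 low    # command to turn it on at low priority
```

Printing the command instead of running it keeps the sketch safe to try on any machine; paste the output into an ESXi shell once it looks right.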

The unmap command matters most when the backend is expensive SAN storage. Many customers running various SAN models have been surprised by how much space issuing the UNMAP command reclaims. Some may gain back as much as 75% of their SAN storage, or more, if there has been a great deal of churn from file and VM deletion.

Thoughts

The VMware UNMAP command is a great utility that can reclaim, in some cases, massive amounts of space on very expensive SAN storage. VMware administrators should learn how the command behaves in the version of vSphere they run. VMware vSphere 6.0, for instance, requires the command to be run manually or scheduled through cron or some other means. VMware vSphere 6.5 brings back the built-in mechanism that unmaps a datastore automatically, as long as the requirements are met, such as the datastore being VMFS 6. Reclaiming thin provisioned blocks whose data has been deleted can greatly extend usable capacity. Understanding both the benefits of thin provisioning and the extra administrative work that thin volumes require, such as running UNMAP, helps administrators get the most out of it.
